Request Access For A Free Trial

Forecasting and Planning

Marketing and Sales AI

Anomaly Detection

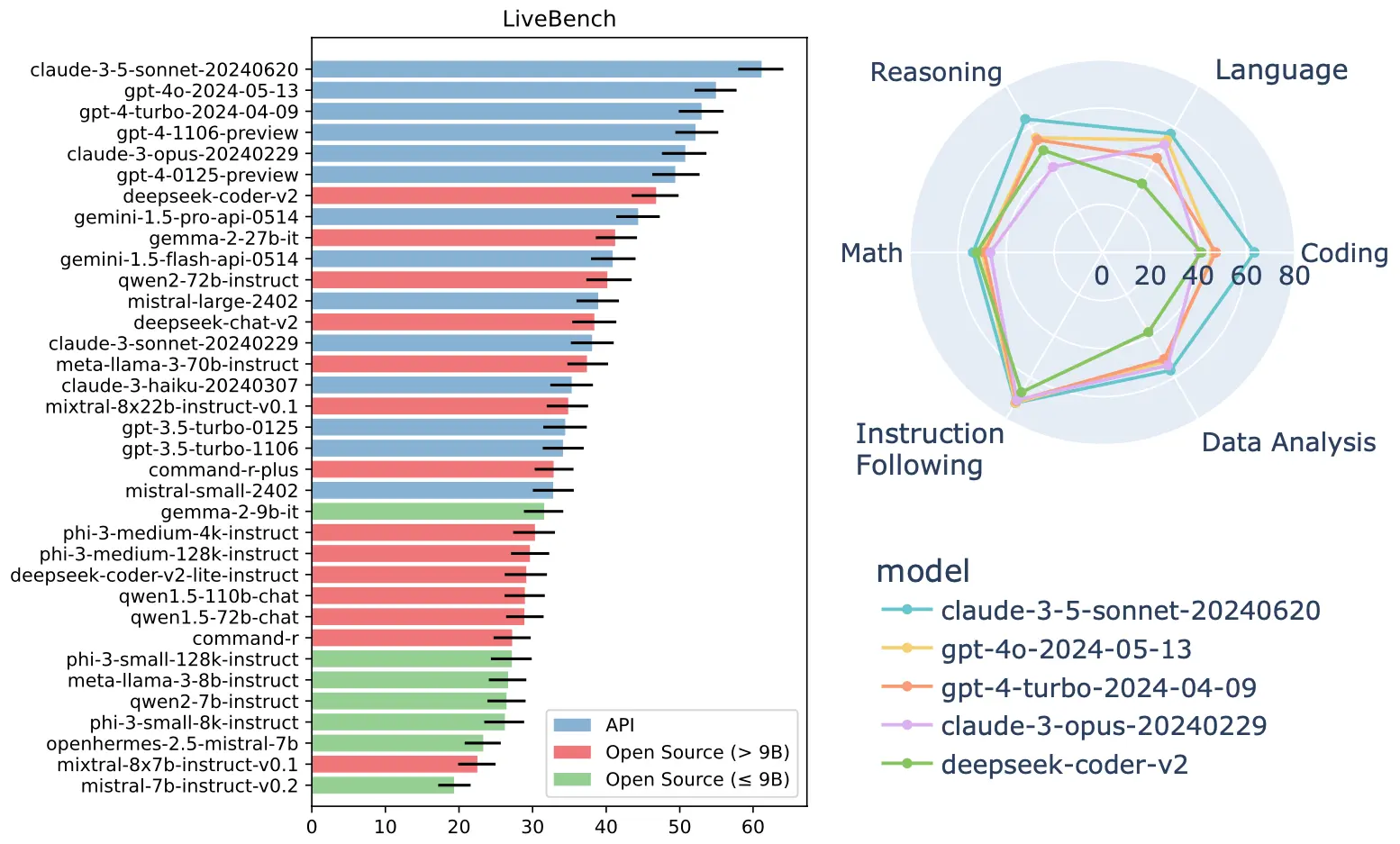

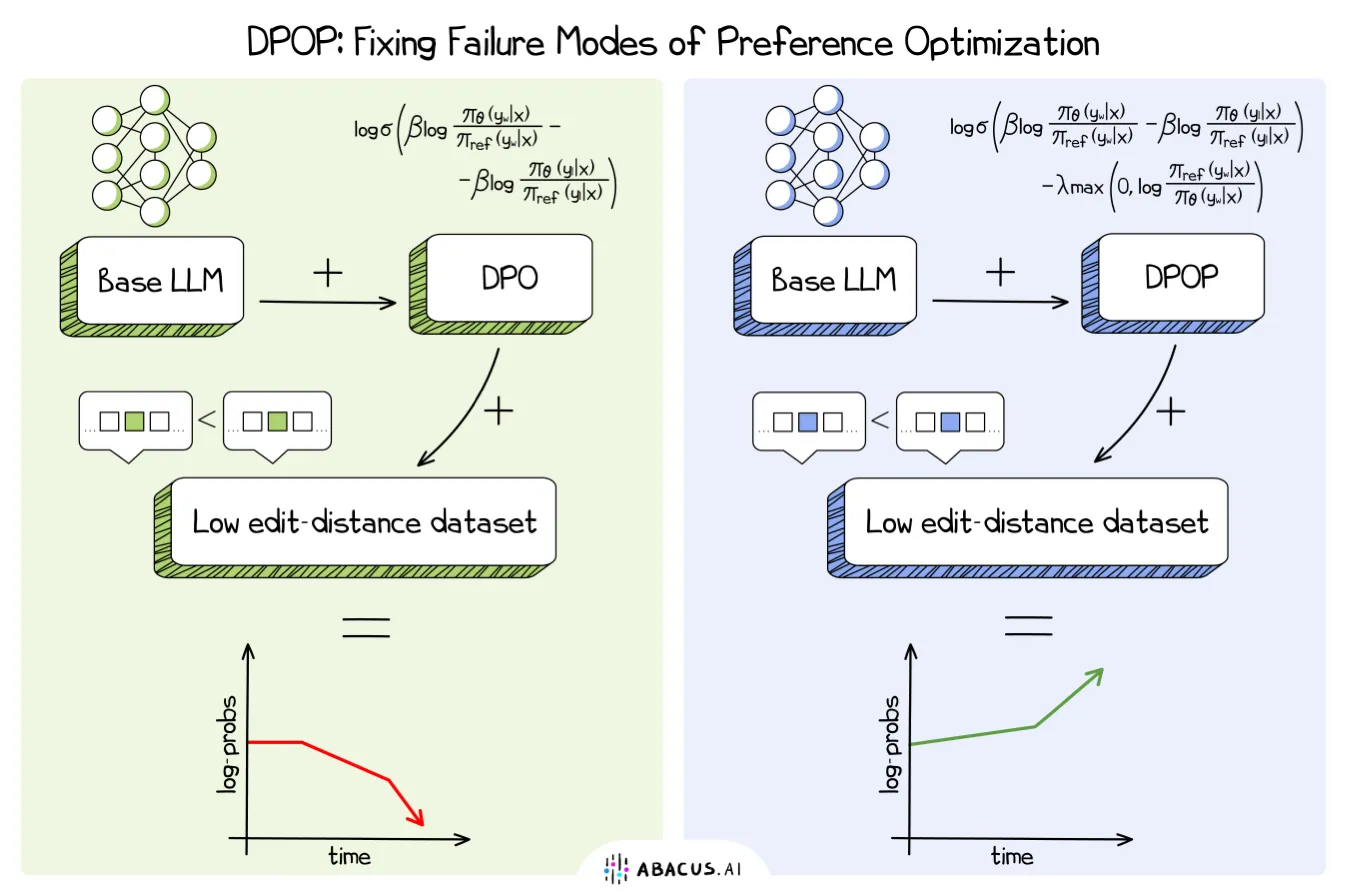

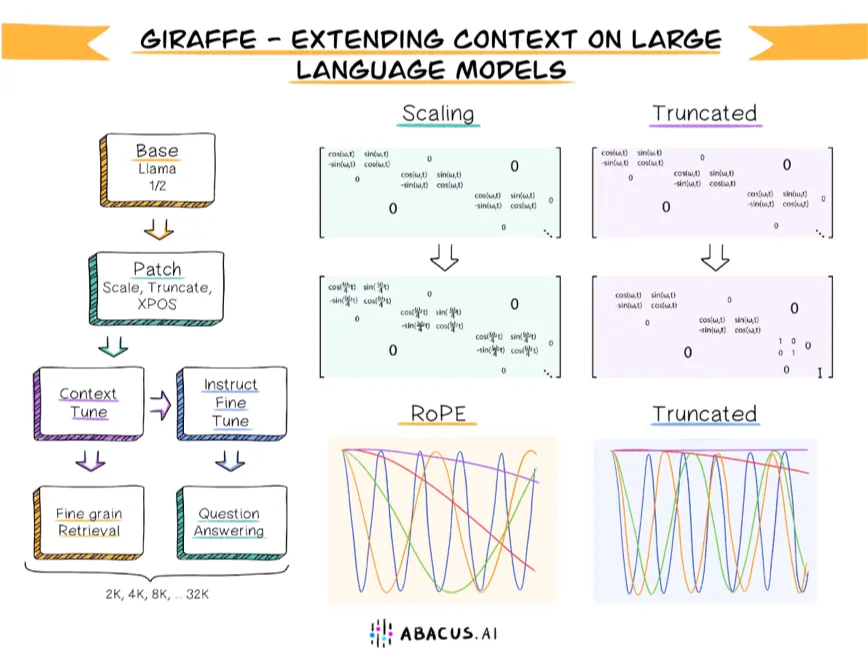

Foundation Models

Language AI

Fraud and Security

Structured ML

Vision AI

Personalization AI

Thanks for requesting access to Abacus.AI. We will get back to you shortly.